Google AI Open-Sources Flan-T5: A Transformer-Based Language Model That Uses a Text-to-Text Approach for NLP Tasks

Large language models, such as PaLM, Chinchilla, and ChatGPT, have opened up new possibilities for performing natural language processing (NLP) tasks from instructional prompts. Prior work has demonstrated that instruction tuning, which involves finetuning language models on a variety of NLP tasks formatted with instructions, further improves a model's ability to carry out an unseen task given an instruction. In this paper, the researchers evaluate the approaches and results of open-sourced instruction generalization efforts by comparing their finetuning procedures and strategies.

This work focuses on the details of instruction tuning methods, ablating individual factors and comparing them directly. The researchers identify and evaluate the critical methodological improvements in the "Flan 2022 Collection," the term they use for the data collection and the methods applied to the data and the instruction tuning process. Related work focuses on the emergent and state-of-the-art results of combining Flan 2022 with PaLM 540B. The Flan 2022 Collection contains the most comprehensive set of tasks and methods for instruction tuning that is currently publicly available. It has been augmented with thousands of high-quality templates and better formatting patterns.

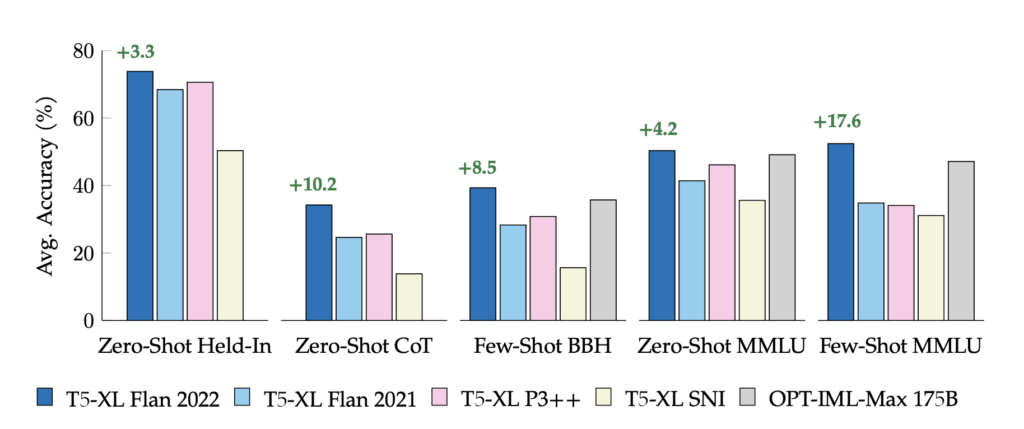

They demonstrate that, on all evaluated benchmarks, a model trained on this collection outperforms models trained on other public collections, including the original Flan 2021, T0++, Super-Natural Instructions, and the concurrent work on OPT-IML. For identically sized models, this includes improvements of 4.2%+ and 8.5% on the MMLU and BIG-Bench Hard evaluation benchmarks, respectively. According to an analysis of the Flan 2022 approach, the strong results are due to the larger and more diverse collection of tasks and to several simple techniques for finetuning and data augmentation. In particular, training on a mix of examples templated with zero-shot, few-shot, and chain-of-thought prompts improves performance in all of these settings.
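To make the three prompt settings concrete, here is a minimal sketch that renders one example as a zero-shot prompt, a few-shot prompt, and a chain-of-thought training pair. The instruction wording, the function names, and the arithmetic sample are illustrative assumptions, not the actual templates from the Flan 2022 Collection.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical instruction text; the real collection uses many varied templates.
INSTRUCTION = "Answer the following question."


@dataclass
class Example:
    question: str
    answer: str
    rationale: Optional[str] = None  # step-by-step reasoning, if available


def zero_shot_prompt(ex: Example) -> str:
    # Instruction plus the query only, with no solved exemplars.
    return f"{INSTRUCTION}\n\nQ: {ex.question}\nA:"


def few_shot_prompt(ex: Example, exemplars: List[Example]) -> str:
    # Solved exemplars are placed before the query.
    shots = "\n\n".join(f"Q: {e.question}\nA: {e.answer}" for e in exemplars)
    return f"{INSTRUCTION}\n\n{shots}\n\nQ: {ex.question}\nA:"


def cot_pair(ex: Example) -> Tuple[str, str]:
    # The target spells out the rationale before stating the final answer.
    prompt = f"{INSTRUCTION} Explain your reasoning step by step.\n\nQ: {ex.question}\nA:"
    target = f"{ex.rationale} So the answer is {ex.answer}."
    return prompt, target


query = Example("What is 3 + 4 * 2?", "11", "4 * 2 = 8, and 3 + 8 = 11.")
shots = [Example("What is 2 + 2?", "4")]

print(zero_shot_prompt(query))
print(few_shot_prompt(query, shots))
print(cot_pair(query))
```

A training mixture in this style would draw each example through one of the three renderers, so the model sees all prompt formats during finetuning.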

For instance, a 10% increase in few-shot prompts improves zero-shot prompting results by 2% or more. Furthermore, balancing task sources and increasing task variety by inverting input-output pairs, as done in prior work, are both shown to be critical to performance. In single-task finetuning, the resulting Flan-T5 model converges faster and performs better than T5 models, indicating that instruction-tuned models offer a more computationally efficient starting point for downstream applications. The researchers anticipate that making these results and tools openly accessible will streamline the resources available for instruction tuning and hasten the development of more general-purpose language models.
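The two data techniques mentioned here, input inversion and balancing of task sources, can be sketched as follows. The inversion template is hypothetical. The size-capped proportional weighting is only one simple balancing heuristic, assumed here for illustration; the article does not say which weighting scheme the Flan 2022 Collection uses.

```python
import random
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (input text, target text)


def invert(pair: Pair) -> Pair:
    """Create a new task from an existing pair by swapping input and output."""
    question, answer = pair
    # Hypothetical inversion template: ask the model to produce the question.
    inverted_input = f"Write a question whose answer is: {answer}"
    return inverted_input, question


def capped_mixture_weights(sizes: Dict[str, int], cap: int) -> Dict[str, float]:
    """Sampling weights proportional to dataset size, with each size capped
    so that very large sources cannot dominate the mixture (an assumption)."""
    capped = {name: min(n, cap) for name, n in sizes.items()}
    total = sum(capped.values())
    return {name: n / total for name, n in capped.items()}


def sample_sources(weights: Dict[str, float], k: int, seed: int = 0) -> List[str]:
    """Draw k source names according to the mixture weights."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=k)


pair = ("What is the capital of France?", "Paris")
print(invert(pair))

weights = capped_mixture_weights({"big_source": 1_000_000, "small_source": 1_000}, cap=3_000)
print(weights)
print(sample_sources(weights, k=5))
```

Without the cap, the large source in this toy setup would receive about 99.9% of the samples; with it, the split is 75% to 25%.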

The main contributions of this study are as follows:

- Methodological: Show that training with a mix of zero-shot and few-shot prompts produces significantly better results in both settings.
- Methodological: Measure and demonstrate the key techniques for effective instruction tuning, including scaling (Section 3.3 of the paper), enriching task variety with input inversion, adding chain-of-thought training data, and balancing different data sources.
- Results: These technical choices improve held-out task performance by 3–17% compared to available open-source instruction tuning collections.
- Findings: Flan-T5 XL provides a more robust and effective computational starting point for single-task finetuning.
- Release: Make the new Flan 2022 task collection, templates, and evaluation methodologies available for public use. Source code is available on GitHub.

Check out the Paper and GitHub. All credit for this research goes to the researchers on this project.

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.