A guide to building AI agents in GxP environments

Healthcare and life sciences organizations are transforming drug discovery, medical devices, and patient care with generative AI agents. In regulated industries, any system that impacts product quality or patient safety must comply with GxP (Good Practice) regulations, such as Good Clinical Practice (GCP), Good Laboratory Practice (GLP), and Good Manufacturing Practice (GMP). Organizations must demonstrate to regulatory authorities that their AI agents are safe, effective, and meet quality standards. Building AI agents for these GxP environments requires a strategic approach that balances innovation, speed, and regulatory requirements.

AI agents can be built for GxP environments: the key lies in understanding how to build them appropriately based on their risk profiles. Generative AI introduces unique challenges around explainability, probabilistic outputs, and continuous learning that require thoughtful risk assessment rather than blanket validation approaches. The disconnect between traditional GxP compliance methods and modern AI capabilities creates barriers to implementation, increases validation costs, slows innovation, and limits the potential benefits for product quality and patient care.

The regulatory landscape for GxP compliance is evolving to address the unique characteristics of AI. Traditional Computer System Validation (CSV) approaches, often built on uniform validation strategies, are being supplemented by Computer Software Assurance (CSA) frameworks that emphasize flexible, risk-based validation methods tailored to each system’s actual impact and complexity (FDA latest guidance).

In this post, we cover a risk-based implementation framework, practical implementation considerations across different risk levels, the AWS shared responsibility model for compliance, and concrete examples of risk mitigation strategies.

Risk-based implementation framework

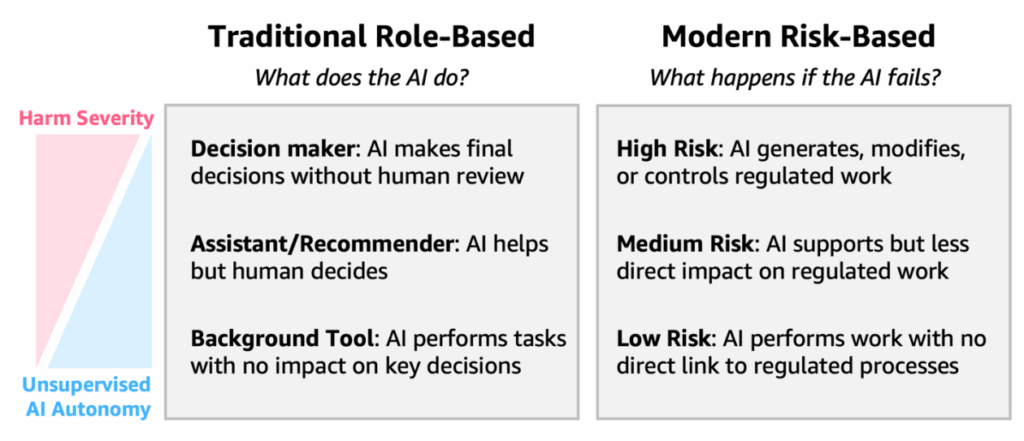

Effective GxP compliance for agentic AI systems requires assessing risk based on operational context rather than technology features alone. To support risk classification, the FDA’s CSA Draft Guidance recommends evaluating intended uses across three factors: severity of potential harm, probability of occurrence, and detectability of failures.
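As a rough illustration of how the three factors might combine, the Python sketch below scores each one from 1 to 3 and multiplies them into an FMEA-style risk priority number. FMEA scoring is a technique we have swapped in for illustration; the scales, the `classify` function, and its thresholds are hypothetical and are not taken from the FDA guidance, so your own risk procedure should define the real values.

```python
"""Illustrative risk classification from severity, probability, and detectability.

Assumption: FMEA-style scoring with hypothetical thresholds; not taken from
FDA guidance. Replace the scales and cut-offs with your own SOP values.
"""

SCALE = (1, 2, 3)  # 1 = best case, 3 = worst case for every factor


def classify(severity: int, probability: int, detectability: int) -> str:
    """Return "low", "medium", or "high" for an intended use.

    severity:      1 = negligible impact ... 3 = patient safety or product quality impact
    probability:   1 = rare failure ... 3 = frequent failure
    detectability: 1 = failures easily caught ... 3 = failures hard to detect
    """
    for name, value in (("severity", severity),
                        ("probability", probability),
                        ("detectability", detectability)):
        if value not in SCALE:
            raise ValueError(f"{name} must be one of {SCALE}, got {value}")

    rpn = severity * probability * detectability  # risk priority number, 1..27
    if rpn >= 12:
        return "high"
    # A severe outcome is never classified as low, even if it is rare and detectable.
    if rpn >= 4 or severity == 3:
        return "medium"
    return "low"


if __name__ == "__main__":
    print(classify(1, 1, 1))  # internal summary, easily checked -> low
    print(classify(3, 2, 2))  # output feeds a regulatory decision -> high
```

The severity override reflects a design choice: a multiplicative score alone can rank a rare but severe failure below a frequent trivial one, so the sketch puts a floor under high-severity uses.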

As Figure 1 shows, this assessment model combines traditional operational roles with modern risk-based levels. Organizations should assess how AI agents function within workflows and their potential impact on regulated processes.

Figure 1. GxP compliance for AI agents combines traditional role-based categories with CSA’s modern risk-based levels

The same AI agent capability can warrant dramatically different validation approaches depending on how it is deployed. How is the agentic AI being consumed within existing GxP processes? What level of human oversight or human-in-the-loop control is in place? Is the AI agent itself being added as an additional control? What is the potential impact of AI failures on product quality, data integrity, or patient safety?

Consider an AI agent for scientific literature review. When it creates literature summaries for internal team meetings, it presents low risk and requires minimal controls. When scientists use its insights to guide research direction, it becomes medium risk and needs structured controls, such as human review checkpoints. When it supports regulatory submissions for drug approval, it becomes high risk and requires comprehensive controls, because its outputs directly impact regulatory decisions and patient safety.
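The literature review example can be sketched in a few lines of Python: the same agent maps to a different risk level, and therefore a different control set, depending on how its outputs are used. The `UsageContext` fields, the control names in `REQUIRED_CONTROLS`, and the helper functions are hypothetical simplifications of the questions above, not a prescribed checklist.

```python
"""Map a deployment context of the same agent to a risk level and required controls.

Assumption: the two yes/no questions and the control names below are hypothetical
simplifications of the questions in this post, not a prescribed checklist.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageContext:
    influences_gxp_decisions: bool      # e.g. scientists use outputs to direct research
    regulatory_or_patient_impact: bool  # e.g. outputs support a regulatory submission


REQUIRED_CONTROLS = {
    "low": ["usage logging"],
    "medium": ["usage logging", "human review checkpoint", "prompt versioning"],
    "high": ["usage logging", "human review checkpoint", "prompt versioning",
             "full provenance chain", "IQ/OQ/PQ testing"],
}


def risk_level(ctx: UsageContext) -> str:
    if ctx.regulatory_or_patient_impact:
        return "high"
    if ctx.influences_gxp_decisions:
        return "medium"
    return "low"


def missing_controls(ctx: UsageContext, implemented: set[str]) -> list[str]:
    """Controls required for this context that are not yet in place."""
    return [c for c in REQUIRED_CONTROLS[risk_level(ctx)] if c not in implemented]


if __name__ == "__main__":
    contexts = [
        ("team meeting", UsageContext(False, False)),
        ("research direction", UsageContext(True, False)),
        ("regulatory submission", UsageContext(True, True)),
    ]
    for name, ctx in contexts:
        print(name, risk_level(ctx), missing_controls(ctx, {"usage logging"}))
```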

This risk-based methodology lets organizations balance innovation with compliance by tailoring validation efforts to actual risk levels rather than applying uniform controls across all AI implementations.

Implementation considerations

Successful AI agent designs require common controls that apply consistently across risk levels for quality and safety. Organizations should maintain clear records of AI decisions, show that data has not been altered, reproduce results when needed, and manage system updates safely. AWS supports these requirements through qualified infrastructure and various compliance programs such as ISO, SOC, and NIST. For a more complete list, see our Healthcare & Life Sciences Compliance page. Detailed compliance validation information for Amazon Bedrock AgentCore is available in the compliance documentation. To implement these controls effectively, organizations can refer to the National Institute of Standards and Technology (NIST) AI Risk Management Framework for AI risk guidance and to the ALCOA+ principles to promote data integrity.
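To make “show that data has not been altered” concrete, the following standard-library Python sketch keeps an append-only, hash-chained log of agent decisions: each record is attributable (user), contemporaneous (UTC timestamp), and stores the SHA-256 hash of its predecessor, so editing an earlier record breaks verification. The function names and record fields are hypothetical, and this illustrates the ALCOA+ idea rather than how any AWS service stores records; for its own logs, AWS CloudTrail offers log file integrity validation.

```python
"""Tamper-evident, append-only audit log for AI agent decisions (illustrative).

Each record is attributable (user), contemporaneous (UTC timestamp), and chained
to its predecessor by a SHA-256 hash so later edits are detectable.
"""
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(log: list, user: str, action: str, detail: dict) -> dict:
    body = {
        "user": user,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "detail": detail,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    record = {**body, "hash": _digest(body)}
    log.append(record)
    return record


def verify(log: list) -> bool:
    """Recompute every hash; False if any record was altered or the chain order is broken."""
    prev = GENESIS
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev_hash"] != prev or _digest(body) != record["hash"]:
            return False
        prev = record["hash"]
    return True


if __name__ == "__main__":
    log: list = []
    append_record(log, "alice", "invoke_agent", {"prompt_version": 3, "output": "summary A"})
    append_record(log, "bob", "approve_output", {"record": 0})
    print(verify(log))  # True
    log[0]["detail"]["output"] = "summary B"  # simulate an unauthorized edit
    print(verify(log))  # False
```

A hash chain detects edits but not truncation of the newest records; in practice the latest hash would also be stored somewhere write-protected.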

Shared responsibility model

Successful generative AI cloud implementation in GxP environments requires understanding the division of responsibilities between customers and AWS, as outlined in the Shared responsibility model, so that organizations can focus on delivering effective, compliance-aligned solutions.

While AWS helps protect the infrastructure that runs the services offered in the AWS Cloud, Table 1 provides practical examples of how AWS can help customers validate their agentic AI systems.

| Focus | Customer responsibilities | How AWS helps |
| --- | --- | --- |
| Validation strategy | Design risk-appropriate validation approaches using AWS services for GxP compliance. Establish acceptance criteria and validation protocols based on intended use. | Inherit compliance controls from AWS services, such as Amazon Bedrock’s ISO 27001, SOC 1/2/3, FedRAMP, and GDPR/HIPAA eligibility. Support your GxP training requirements through AWS Skill Builder for artificial intelligence and machine learning (AI/ML) and the AWS Certified Machine Learning – Specialty certification. Use infrastructure as code through AWS CloudFormation to support on-demand validations and deployments that provide repeatable installation qualification (IQ) for your agentic workloads. |
| GxP procedures | Develop SOPs that integrate AWS capabilities with existing quality management systems. Establish documented procedures for system operation and maintenance. | Build GxP agentic systems with HCLS Landing Zones, which are designed for highly regulated workloads and can augment and support your standard operating procedure requirements. Augment risk management procedures with Amazon Bedrock AgentCore, which supports end-to-end visibility and runtime requirements for complex multi-step tasks. Use the AWS Certified SysOps Administrator and AWS Certified DevOps Engineer certifications for training requirements and to help teams operationalize and govern procedural compliance on AWS. |
| User management | Configure IAM roles and permissions aligned with GxP user access requirements. Maintain user access documentation and training records. | Secure AI agent access with AWS IAM and Amazon Bedrock AgentCore Identity to establish fine-grained permissions and enterprise identity integration, and use IAM Identity Center to streamline workforce user access. |
| Performance criteria | Define acceptance criteria and monitoring thresholds for generative AI applications. Establish performance monitoring protocols. | Use Amazon Bedrock Provisioned Throughput for agentic workflows that require consistent, guaranteed performance. Monitor performance with Amazon Bedrock AgentCore Observability and Amazon CloudWatch, using customizable alerts and dashboards for end-to-end visibility. |
| Documentation | Create validation documentation demonstrating how AWS services support GxP compliance. Maintain quality system records. | Use AWS Config to help generate compliance reports for your agentic deployments with conformance packs for HIPAA, 21 CFR Part 11, and GxP EU Annex 11. Store your GxP data in Amazon Simple Storage Service (Amazon S3), which is designed for 11 nines of durability and supports versioning and user-defined retention policies. |
| Provenance | Track model versions while maintaining validated snapshots. Version-control prompt templates to facilitate consistent AI interactions, track changes, and maintain records for audit trails. Lock tool dependencies in validated environments. | Control models and data with Amazon Bedrock configurable data residency and immutable model versioning. AWS Config performs automated configuration monitoring and validation. AWS CloudTrail captures comprehensive audit logging. Support reproducible AI behavior through model versioning with AWS CodePipeline, AWS CodeCommit, and Amazon Bedrock. |

The following is an example of what customers might need to implement and what AWS provides when building AI agents (Figure 2):

Figure 2. Generative AI implementation in GxP environments requires understanding the division of responsibilities between customers and AWS.

Let’s look at how these shared responsibilities translate into actual implementation.

Provenance and reproducibility

AWS supports the following:

- Amazon Bedrock – Provides immutable model versioning, facilitating reproducible AI behavior across the system lifecycle.

- AWS Config – Automatically tracks and validates system configurations, continuously monitoring for drift from validated baselines.

- AWS CloudTrail – Generates audit trails with cryptographic integrity, capturing model invocations with full metadata, including timestamps, user identities, and model versions. Infrastructure as code support through AWS CloudFormation enables version-controlled, repeatable deployments.

Customer responsibility: Organizations must version-control their infrastructure deployments and their prompt templates to keep AI behavior consistent, and must maintain audit trails of prompt changes. Tool dependencies must be tracked and locked to specific versions in validated environments to help prevent unintended updates that could affect AI outputs.
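A minimal sketch of these two customer responsibilities, assuming an in-memory registry as a stand-in for a real version control repository: released prompt templates are immutable, every registration is recorded with a content hash, and installed tool versions are compared against the validated baseline. `PromptRegistry`, `unpinned_tools`, and the tool names are hypothetical.

```python
"""Version-controlled prompt templates and locked tool dependencies (illustrative).

Assumptions: an in-memory registry stands in for a real repository such as Git,
and tool versions are compared as exact strings against a validated baseline.
"""
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    templates: dict = field(default_factory=dict)  # (name, version) -> template text
    history: list = field(default_factory=list)    # audit trail of every registration

    def register(self, name: str, version: int, template: str, author: str, reason: str) -> str:
        key = (name, version)
        if key in self.templates:
            # Released versions are immutable; a change requires a new version number.
            raise ValueError(f"{name} v{version} already exists")
        self.templates[key] = template
        digest = hashlib.sha256(template.encode("utf-8")).hexdigest()
        self.history.append({"name": name, "version": version, "sha256": digest,
                             "author": author, "reason": reason})
        return digest

    def render(self, name: str, version: int, **values: str) -> str:
        # Callers always pin an explicit version; there is no "latest".
        return self.templates[(name, version)].format(**values)


def unpinned_tools(validated: dict, installed: dict) -> list:
    """Tools whose installed version differs from, or is missing versus, the validated baseline."""
    return sorted(tool for tool, version in validated.items() if installed.get(tool) != version)


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.register("lit_summary", 1, "Summarize for an internal meeting:\n{text}",
                      author="alice", reason="initial release")
    print(registry.render("lit_summary", 1, text="Abstract text"))
    print(unpinned_tools({"pubmed_search": "1.4.2", "pdf_parser": "2.0.1"},
                         {"pubmed_search": "1.4.2", "pdf_parser": "2.1.0"}))  # ['pdf_parser']
```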

Observability and performance metrics

AWS supports the following:

- Amazon Bedrock AgentCore – Provides a comprehensive solution for the unique risks that agentic AI introduces, including end-to-end visibility into complex multi-step agent tasks and runtime requirements for orchestrating reasoning chains. Amazon Bedrock AgentCore Observability captures the complete chain of decisions and tool invocations, so that you can inspect an agent’s execution path, audit intermediate outputs, and investigate failures. The Bedrock Retrieval API for Amazon Bedrock Knowledge Bases enables traceability from retrieved documents to AI-generated outputs.

- Amazon CloudWatch – Delivers real-time monitoring with customizable alerts and dashboards, aggregating performance metrics across agent invocations. Organizations can configure logging levels based on risk, such as basic CloudTrail logging for low-risk applications, detailed AgentCore traces for medium-risk applications, and full provenance chains for high-risk regulatory submissions.
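Risk-tiered logging can be expressed as cumulative configuration, so that a higher tier never captures less than a lower one. In the sketch below, the tier names follow the text, while the capture labels and `capture_for` are hypothetical names for illustration, not AWS configuration keys.

```python
"""Risk-tiered logging capture (illustrative).

Assumption: capture items are cumulative, so a higher tier never logs less than a
lower one. The item names are labels for this sketch, not AWS configuration keys.
"""
TIERS = ["low", "medium", "high"]

ADDED_AT_TIER = {
    "low": {"cloudtrail_api_events"},
    "medium": {"agentcore_traces"},
    "high": {"full_provenance_chain"},
}


def capture_for(risk: str) -> set:
    """Everything to capture for a risk tier, including all lower tiers."""
    if risk not in TIERS:
        raise ValueError(f"unknown risk tier: {risk!r}")
    captured = set()
    for tier in TIERS[: TIERS.index(risk) + 1]:
        captured |= ADDED_AT_TIER[tier]
    return captured


if __name__ == "__main__":
    for tier in TIERS:
        print(tier, sorted(capture_for(tier)))
```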

Customer responsibility: Organizations define acceptance criteria and monitoring thresholds appropriate to their risk level, for example, citation accuracy requirements for our literature review agent. Teams must decide when human-in-the-loop triggers are required, such as mandatory expert review before AI recommendations influence research decisions or regulatory submissions.
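For the literature review agent, a citation accuracy criterion and a human-in-the-loop trigger might look like the following sketch. It assumes the agent cites sources as `[DOC-<n>]` and that retrieved document IDs are available from the retrieval step; the 0.95 threshold, the rule that medium- and high-risk outputs always go to expert review, and the function names are placeholders for your documented acceptance criteria.

```python
"""Acceptance check and human-in-the-loop routing for the literature review agent.

Assumptions: the agent cites sources as [DOC-<n>], the 0.95 threshold is a
placeholder for your documented acceptance criterion, and medium/high-risk
outputs always require expert review before use.
"""
import re

CITATION = re.compile(r"\[(DOC-\d+)\]")


def citation_accuracy(answer: str, retrieved_ids: set) -> float:
    """Fraction of citations in the answer that point to documents actually retrieved."""
    cited = CITATION.findall(answer)
    if not cited:
        return 0.0  # an uncited answer cannot meet a citation criterion
    return sum(1 for doc_id in cited if doc_id in retrieved_ids) / len(cited)


def route(risk: str, accuracy: float, threshold: float = 0.95) -> str:
    if accuracy < threshold:
        return "reject"         # fails the acceptance criterion: regenerate or escalate
    if risk in ("medium", "high"):
        return "expert_review"  # mandatory human-in-the-loop checkpoint
    return "release"


if __name__ == "__main__":
    answer = "Compound X reduced markers [DOC-1]; see also [DOC-7]."
    acc = citation_accuracy(answer, {"DOC-1", "DOC-2"})
    print(acc, route("medium", acc))  # 0.5 reject
```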

User management, session isolation, and security

AWS supports the following:

- Amazon Bedrock AgentCore – Provides session isolation using dedicated microVMs, which helps prevent cross-contamination between different projects or regulatory submissions. The service supports VPC endpoints to establish private connections between your Amazon VPC and Amazon Bedrock AgentCore resources, keeping inter-network traffic private. All communication with Amazon Bedrock AgentCore endpoints uses HTTPS only, across all supported Regions, with no HTTP support, so that all communications are digitally signed for authentication and integrity.

Amazon Bedrock AgentCore maintains strong encryption standards, with a minimum of TLS 1.2 (TLS 1.3 recommended) for all API endpoints. Both control plane and data plane traffic are encrypted with TLS, and no unencrypted communication is permitted. Amazon Bedrock AgentCore Identity addresses identity complexity with a secure token vault for credential management, providing fine-grained permissions and enterprise identity integration.

AWS Identity and Access Management (IAM) enables organizations to configure role-based access controls based on least-privilege principles. Built-in encryption protects data both in transit and at rest, while network isolation and compliance programs (SOC, ISO 27001, HIPAA eligibility) support regulatory requirements. Amazon Bedrock offers configurable data residency, allowing organizations to specify Regions for data processing.

Customer responsibility: Organizations configure IAM roles and policies aligned with GxP user access requirements, enforcing least-privilege access and proper segregation of duties. Access controls must be documented and maintained as part of the quality management system.
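Segregation of duties can be checked mechanically once role assignments are exported from your identity source. The sketch below flags any user who holds two roles that your quality system defines as conflicting; the role names, the conflict list, and `sod_violations` are hypothetical, and how you export assignments (for example, from IAM Identity Center) is not shown.

```python
"""Segregation-of-duties check over user-to-role assignments (illustrative).

Assumptions: role names and conflicting pairs are hypothetical; in practice the
assignments would be exported from your identity source and the conflict list
defined in your quality system.
"""
CONFLICTING_ROLES = [
    ("prompt_author", "prompt_approver"),        # nobody approves their own prompt change
    ("agent_developer", "production_deployer"),  # build and release are separated
    ("validation_executor", "validation_reviewer"),
]


def sod_violations(assignments: dict) -> list:
    """assignments maps user -> set of roles; returns (user, role_a, role_b) conflicts."""
    violations = []
    for user in sorted(assignments):
        roles = assignments[user]
        for role_a, role_b in CONFLICTING_ROLES:
            if role_a in roles and role_b in roles:
                violations.append((user, role_a, role_b))
    return violations


if __name__ == "__main__":
    print(sod_violations({
        "alice": {"prompt_author", "prompt_approver"},
        "bob": {"agent_developer"},
    }))  # [('alice', 'prompt_author', 'prompt_approver')]
```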

GxP controls for AI agents

The implementation of GxP risk controls for AI agents can be approached through three key phases.

Risk Assessment evaluates the GxP workload against the organization’s risk-based validation framework. Continuous quality assurance is maintained through structured feedback loops, ranging from real-time verification (see Continuous Validation) to bi-annual reviews. This process ensures that reviewers are trained on the evolving AI landscape, adapt to user feedback, and apply appropriate intervention criteria. In practice, risk assessments define risk categories and triggers for reassessment.
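Reassessment triggers can be encoded as checks against the configuration that was assessed. The following sketch assumes three baseline attributes (model, prompt template version, intended use) and interprets bi-annual as roughly every 182 days; `AgentConfig`, `reassessment_triggers`, the model ID, and the interval are hypothetical placeholders.

```python
"""Triggers for re-running a risk assessment (illustrative).

Assumptions: the baseline fields and the 182-day interval (roughly twice a year)
are placeholders; your risk procedure defines the actual triggers.
"""
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class AgentConfig:
    model_id: str
    prompt_version: int
    intended_use: str


def reassessment_triggers(baseline: AgentConfig, assessed_on: date,
                          current: AgentConfig, today: date,
                          review_interval_days: int = 182) -> list:
    triggers = []
    if current.model_id != baseline.model_id:
        triggers.append("model changed")
    if current.prompt_version != baseline.prompt_version:
        triggers.append("prompt template changed")
    if current.intended_use != baseline.intended_use:
        triggers.append("intended use changed")
    if today - assessed_on > timedelta(days=review_interval_days):
        triggers.append("periodic review due")
    return triggers


if __name__ == "__main__":
    base = AgentConfig("example-model-v1", 3, "internal literature summaries")
    moved = AgentConfig("example-model-v1", 3, "regulatory submission support")
    print(reassessment_triggers(base, date(2025, 1, 10), moved, date(2025, 3, 1)))
    # ['intended use changed']
```

A change in intended use is the trigger that matters most here, because, as the literature review example shows, it can move the same agent into a different risk category.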

Control Selection means carefully selecting the minimum required controls based on 1) the risk classification, 2) the specific design attributes, and 3) the operational context of the AI agents. This targeted, risk-adjusted approach ensures that controls align with both technical requirements and compliance objectives. In practice, risk categories drive required and selectable controls. For example, a medium-risk system might require Agent & Prompt Governance controls along with two or more detective controls, while a high-risk system might require the Traditional Testing (IQ, OQ, PQ) control and two additional corrective controls.
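The medium- and high-risk examples above can be written as rules and checked automatically against a proposed control set. In this sketch, the control names follow the controls table in this post and the medium and high rules mirror the text; the low-risk rule, the data layout, and `control_gaps` are assumptions.

```python
"""Check a selected control set against risk-tier rules (illustrative).

The medium and high rules mirror the examples in the text; the low tier and the
exact encoding are assumptions. Control names follow the controls table.
"""
DETECTIVE = {
    "Traditional Testing (IQ, OQ, PQ)",
    "Explainability Audits & Trajectory Reviews",
    "Model & I/O Drift Detection",
    "Performance Monitoring",
    "Log and Event Monitoring (SIEM)",
    "Code & Model Risk Scanning",
    "Adversarial Testing (Red Teaming) & Critic/Grader Model",
    "Internal Audits",
}
CORRECTIVE = {
    "Model & Prompt Rollback",
    "System Fallback",
    "Human-in-the-Loop & Escalation Management",
    "CAPA Process",
    "Incident Response Plan",
    "Disaster Recovery Plan (DRP)",
}

RULES = {
    "low": {"required": set(), "min_detective": 0, "min_corrective": 0},
    "medium": {"required": {"Agent & Prompt Governance"}, "min_detective": 2, "min_corrective": 0},
    "high": {"required": {"Traditional Testing (IQ, OQ, PQ)"}, "min_detective": 0, "min_corrective": 2},
}


def control_gaps(risk: str, selected: set) -> list:
    """Return human-readable gaps; an empty list means the selection satisfies the rules."""
    rule = RULES[risk]
    gaps = [f"missing required control: {c}" for c in sorted(rule["required"] - selected)]
    n_detective = len(selected & DETECTIVE)
    if n_detective < rule["min_detective"]:
        gaps.append(f"needs {rule['min_detective']} detective controls, has {n_detective}")
    n_corrective = len(selected & CORRECTIVE)
    if n_corrective < rule["min_corrective"]:
        gaps.append(f"needs {rule['min_corrective']} additional corrective controls, has {n_corrective}")
    return gaps


if __name__ == "__main__":
    print(control_gaps("medium", {"Agent & Prompt Governance", "Performance Monitoring"}))
    # ['needs 2 detective controls, has 1']
    print(control_gaps("high", {"Traditional Testing (IQ, OQ, PQ)", "System Fallback", "CAPA Process"}))
    # []
```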

Continuous Validation is an approach that combines traditional fit-for-intended-use validation with a subsequent process that uses real-world data (RWD), such as operational logs and user feedback, to create supplemental real-world evidence (RWE) that the system maintains a validated state. As a control mechanism in its own right, Continuous Validation helps address modern cloud-based designs, including SaaS models, model drift, and evolving cloud infrastructure. Through ongoing monitoring of performance and functionality, this approach helps maintain GxP compliance while supporting regulatory inspections. In practice, this can range from a compliance-aligned user portal that tracks user issue trends for low-risk categories to periodic self-tests with compliance reports for high-risk systems.
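For high-risk systems, a periodic self-test could replay a fixed “golden” set of questions and record whether the agent still meets its acceptance criteria. The sketch below assumes the agent is any Python callable from prompt to text and uses simple phrase matching as a stand-in for a real evaluation method; `self_test`, the golden-set layout, the stub agent, and the pass-rate threshold are hypothetical.

```python
"""Periodic self-test against a fixed golden set (illustrative).

Assumptions: `agent` is any callable from prompt to text, each golden case lists
phrases the answer must contain, and the pass-rate threshold is a placeholder.
Phrase matching is a deliberately simple stand-in for a real evaluation method.
"""
from typing import Callable


def self_test(agent: Callable[[str], str], golden_set: list, min_pass_rate: float = 1.0) -> dict:
    if not golden_set:
        raise ValueError("golden set must not be empty")
    results = []
    for case in golden_set:
        output = agent(case["input"]).lower()
        passed = all(phrase.lower() in output for phrase in case["must_contain"])
        results.append({"id": case["id"], "passed": passed})
    pass_rate = sum(r["passed"] for r in results) / len(results)
    return {
        "pass_rate": pass_rate,
        "validated_state": pass_rate >= min_pass_rate,  # evidence for the compliance report
        "results": results,
    }


if __name__ == "__main__":
    def stub_agent(prompt: str) -> str:  # stand-in for a real agent invocation
        return "Deviation DEV-12 is linked to SOP-004." if "DEV-12" in prompt else "No record found."

    golden = [
        {"id": "g1", "input": "Which SOP covers DEV-12?", "must_contain": ["SOP-004"]},
        {"id": "g2", "input": "Which SOP covers DEV-99?", "must_contain": ["SOP-010"]},
    ]
    report = self_test(stub_agent, golden)
    print(report["pass_rate"], report["validated_state"])  # 0.5 False
```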

The following table provides examples of preventive, detective, and corrective controls for agentic AI systems that could be incorporated into a modern GxP validation framework.

| Control element | Supporting AWS services |
| --- | --- |
| Preventive Controls | |
| Agent Behavior Specification | Use the Amazon Bedrock model catalog to find the models that meet your specific requirements, and use AWS service quotas (limits) and service feature documentation to define supported and verifiable agent capabilities. |
| Threat Modeling | Use AWS Well-Architected Framework (Security Pillar) tools and AWS service security documentation to proactively identify AI-specific threats such as prompt injection, data poisoning, and model inversion, and to design preventive mitigations using AWS services. |
| Response Content and Relevance Control | Use Amazon Bedrock Guardrails to enforce real-time safety policies for large language models (LLMs) that deny harmful inputs or responses. Guardrails can also define denylists and filter PII. Use Amazon Bedrock Knowledge Bases or AWS purpose-built vector databases for retrieval-augmented generation (RAG) to provide controlled, current, and relevant information that helps prevent factual drift. |
| Bias Mitigation in Datasets | Amazon SageMaker Clarify provides tools to run pre-training bias analysis on your datasets. For agents, this helps ensure that the foundational data doesn’t lead to biased decision-making paths or tool usage. |
| Agent & Prompt Governance | Amazon Bedrock Agents and prompt management features support lifecycle processes including creation, evaluation, versioning, and optimization. The features also support advanced prompt templates, content filters, Automated Reasoning checks, and integration with Amazon Bedrock Flows for safer, controlled agentic workflows. |
| Configuration Management | AWS provides an industry-leading suite of configuration management services, such as AWS Config and AWS Audit Manager, which can be used to continuously validate agentic GxP system configurations. Amazon SageMaker Model Registry manages and versions trained machine learning (ML) models for controlled deployments. |
| Secure AI Development | Amazon Q Developer and Kiro provide AI-powered coding assistance that incorporates security best practices and AWS Well-Architected principles for building and maintaining agentic workloads securely from the start. |
| AI Agents as Secondary Controls | Use Amazon Bedrock AgentCore and your data to quickly incorporate AI agents into existing GxP workflows as secondary preventive controls, adding capabilities such as trend analysis, automated inspections, and systems flow analysis that can trigger preventive workflow events. |
| Detective Controls | |
| Traditional Testing (IQ, OQ, PQ) | Use AWS Config and AWS CloudFormation for IQ validation by tracking resource deployment configurations. Use AWS CloudTrail and Amazon CloudWatch to source events, metrics, and logged test results for OQ/PQ validation. |
| Explainability Audits & Trajectory Reviews | Amazon SageMaker Clarify generates explainability reports for custom models. Amazon Bedrock invocation logs can be used to review reasoning or chain of thought to find flaws in an agent’s logic. Use Amazon Bedrock AgentCore Observability to examine agent invocation sessions, traces, and spans. |
| Model & I/O Drift Detection | For custom models, Amazon SageMaker Model Monitor can detect drift in data and model quality. For AI agents using commercial LLMs, use the observability service of Amazon Bedrock AgentCore to design monitoring of inputs (prompts) and outputs (responses) to detect concept drift. Use Amazon CloudWatch alarms to manage compliance notifications. |
| Performance Monitoring | Agentic workloads can use Amazon Bedrock metrics, AgentCore Observability, and Amazon CloudWatch metrics to monitor token usage, cost per interaction, and tool execution latency, and to detect performance and cost anomalies. |
| Log and Event Monitoring (SIEM) | For agentic workloads, Amazon GuardDuty provides intelligent threat detection that analyzes Amazon Bedrock API calls to detect anomalous or potentially malicious use of the agent or LLMs. |
| Code & Model Risk Scanning | Amazon CodeGuru and Amazon Inspector scan agent code and the operational environment for vulnerabilities. These tools can’t assess model weights for risk; however, Amazon SageMaker Model Cards can be used to build model risk scanning controls. |
| Adversarial Testing (Red Teaming) & Critic/Grader Model | Amazon Bedrock evaluation tools help assess model fitness. Amazon Bedrock supports leading model providers, so GxP systems can use multiple models for secondary and tertiary validation. |
| Internal Audits | AWS Audit Manager automates evidence collection for compliance and audits, and AWS CloudTrail provides a streamlined way to review agent actions and verify procedural adherence. |
| Corrective Controls | |
| Model & Prompt Rollback | Use AWS CodePipeline and AWS CloudFormation to quickly revert to a previous, known-good version of a model or prompt template when a problem is detected. |
| System Fallback | AWS Step Functions can orchestrate a fallback to a simpler, more constrained model or a human-only workflow if the primary agent fails. |
| Human-in-the-Loop & Escalation Management | AWS Step Functions, Amazon Simple Notification Service (Amazon SNS), and Amazon Bedrock Prompt Flows can orchestrate workflows that pause and wait for human approval, including dynamic approvals triggered by low agent confidence scores or detected anomalies. |
| CAPA Process | AWS Systems Manager OpsCenter provides a central place to manage operational issues and can be used to track the root cause analysis of an agent’s failure. |
| Incident Response Plan | AWS Security Hub and AWS Systems Manager Incident Manager can automate response plans for AI security incidents (for example, a major jailbreak or data leakage) and provide a central dashboard to manage them. |
| Disaster Recovery Plan (DRP) | AWS Elastic Disaster Recovery (AWS DRS) and AWS Backup provide tools to replicate and recover the entire AI application stack, including to different AWS Regions. |
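The drift detection row leaves the metric open. One option, swapped in here for illustration rather than prescribed by the post, is the Population Stability Index (PSI), which compares the distribution of a simple input or output feature, such as response length in tokens, between the validated baseline and a recent window. The bin edges, the 0.25 alert threshold (a common rule of thumb), and the function names are assumptions; extracting the values from AgentCore Observability or CloudWatch logs is not shown, and in practice the PSI value could be published as a custom CloudWatch metric with an alarm.

```python
"""Input/output drift check with the Population Stability Index (illustrative).

Assumptions: PSI is our choice of metric, not one the post prescribes; the
feature, bin edges (sorted ascending), and 0.25 alert threshold are placeholders
you would set during validation.
"""
import math
from bisect import bisect_right


def _proportions(values: list, edges: list) -> list:
    if not values:
        raise ValueError("need at least one value per window")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1  # bin i covers edges[i-1] <= v < edges[i]
    # Floor empty bins so log() stays finite.
    return [max(c / len(values), 1e-6) for c in counts]


def psi(baseline: list, current: list, edges: list) -> float:
    p = _proportions(baseline, edges)
    q = _proportions(current, edges)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_alert(baseline: list, current: list, edges: list, threshold: float = 0.25) -> bool:
    return psi(baseline, current, edges) > threshold


if __name__ == "__main__":
    validated = [120, 140, 180, 210, 230, 260, 310, 330]  # response lengths at validation
    recent = [420, 450, 480, 510, 530, 560, 600, 620]     # response lengths this week
    edges = [150, 250, 400]
    print(drift_alert(validated, validated, edges))  # False
    print(drift_alert(validated, recent, edges))     # True
```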

Conclusion

Healthcare and life sciences organizations can build GxP-compliant AI agents by adopting a risk-based framework that balances innovation with regulatory requirements. Success requires proper risk classification, controls scaled to system impact, and an understanding of the AWS shared responsibility model. AWS provides qualified infrastructure and comprehensive services, while organizations configure appropriate controls, maintain version management, and implement risk mitigation strategies tailored to their validation needs.

We encourage organizations to explore building GxP-compliant AI agents with AWS services. For more information about implementing compliance-aligned AI systems in regulated environments, contact your AWS account team or visit our Healthcare and Life Sciences Solutions page.

About the authors

Pierre de Malliard is a Senior AI/ML Solutions Architect at Amazon Web Services and supports customers in the Healthcare and Life Sciences industry.

Ian Sutcliffe is a Global Solution Architect with 25+ years of experience in IT, primarily in the Life Sciences industry. A thought leader in the area of regulated cloud computing, he focuses on IT operating models and on process optimization and automation, with the intent of helping customers become Regulated Cloud Natives.

Kristin Ambrosini is a Generative AI Specialist at Amazon Web Services. She drives adoption of scalable generative AI solutions across healthcare and life sciences to transform drug discovery and improve patient outcomes. Kristin blends scientific expertise, technical acumen, and business strategy. She holds a Ph.D. in Biological Sciences.

Ben Xavier is a MedTech Specialist with over 25 years of experience in medical device R&D. He is a passionate leader focused on modernizing the MedTech industry through technology and best practices to accelerate innovation and improve patient outcomes.
