Generate creative advertising using generative AI deployed on Amazon SageMaker

Creative advertising has the potential to be revolutionized by generative AI (GenAI). You can now create a wide variety of novel images, such as product shots, by retraining a GenAI model and providing a few inputs to the model, such as textual prompts (sentences describing the scene and objects to be produced by the model). This technique has shown promising results starting in 2022 with the explosion of a new class of foundation models (FMs) called latent diffusion models, such as Stable Diffusion, Midjourney, and Dall-E-2. However, to use these models in production, the generation process requires constant refining to generate consistent outputs. This often means creating a large number of sample images of the product and clever prompt engineering, which makes the task difficult at scale.

In this post, we explore how this transformative technology can be harnessed to generate captivating and innovative advertisements at scale, especially when dealing with large catalogs of images. By using the power of GenAI, specifically through the technique of inpainting, we can seamlessly create image backgrounds, resulting in visually stunning and engaging content and reducing unwanted image artifacts (termed model hallucinations). We also delve into the practical implementation of this technique by using Amazon SageMaker endpoints, which enable efficient deployment of the GenAI models driving this creative process.

We use inpainting as the key technique within GenAI-based image generation because it offers a powerful solution for replacing missing elements in images. However, this presents certain challenges. For instance, precise control over the positioning of objects within the image can be limited, leading to potential issues such as image artifacts, floating objects, or unblended boundaries, as shown in the following example images.

To overcome this, we propose in this post to strike a balance between creative freedom and efficient production by generating a multitude of realistic images using minimal supervision. To scale the proposed solution for production and streamline the deployment of AI models in the AWS environment, we demonstrate it using SageMaker endpoints.

Specifically, we propose to split the inpainting process into a set of layers, each one potentially with a different set of prompts. The process can be summarized as the following steps:

- First, we prompt for a general scene (for example, “park with trees in the back”) and randomly place the object on that background.

- Next, we add a layer in the lower mid-section of the object by prompting where the object lies (for example, “picnic on grass, or wooden table”).

- Finally, we add a layer similar to the background layer on the upper mid-section of the object using the same prompt as the background.
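One way to picture the three layers is as editable regions around the object. The following is a minimal sketch, not the notebook's implementation: it assumes the object mask is a 2-D boolean array and, as a hypothetical simplification, splits the lower and upper layers at the vertical midpoint of the object's bounding box. The function name `split_layers` and the region names are illustrative.

```python
import numpy as np


def split_layers(object_mask):
    """Return boolean masks for the three editable layers around an object.

    object_mask: 2-D array, truthy where the object is.
    Assumption: the lower/upper split is the vertical midpoint of the
    object's bounding box (an illustrative choice, not the post's exact rule).
    """
    obj = np.asarray(object_mask, dtype=bool)
    rows = np.flatnonzero(obj.any(axis=1))  # rows that contain object pixels
    if rows.size == 0:
        raise ValueError("object mask is empty")

    mid_row = (rows[0] + rows[-1] + 1) // 2
    row_index = np.arange(obj.shape[0])[:, None]  # column vector of row ids
    editable = ~obj  # never repaint the product itself

    return {
        "background": editable,                      # general scene prompt
        "lower": editable & (row_index >= mid_row),  # where the object lies
        "upper": editable & (row_index < mid_row),   # reuses background prompt
    }
```

Each region could then be paired with its own prompt before being sent to the inpainting model.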

The benefit of this process is the improvement in the realism of the object, because it’s perceived with better scaling and positioning relative to the background environment, which matches human expectations. The following figure shows the steps of the proposed solution.

Solution overview

To accomplish the tasks, the following flow of the data is considered:

- Segment Anything Model (SAM) and Stable Diffusion Inpainting models are hosted in SageMaker endpoints.

- A background prompt is used to create a generated background image using the Stable Diffusion model.

- A base product image is passed through SAM to generate a mask. The inverse of the mask is called the anti-mask.

- The generated background image, mask, along with foreground prompts and negative prompts are used as input to the Stable Diffusion Inpainting model to generate a generated intermediate background image.

- Similarly, the generated background image, anti-mask, along with foreground prompts and negative prompts are used as input to the Stable Diffusion Inpainting model to generate a generated intermediate foreground image.

- The final output of the generated product image is obtained by combining the generated intermediate foreground image and generated intermediate background image.
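The mask, anti-mask, and final combination step can be illustrated with plain NumPy. This is a sketch under stated assumptions: masks are 2-D `uint8` arrays with values 0 or 255, images are `(H, W, 3)` arrays, and the two intermediate images are combined by selecting pixels according to the mask. The post does not specify the exact blending rule, so `combine` is a hypothetical helper.

```python
import numpy as np


def anti_mask(mask):
    """Invert a 0/255 uint8 mask."""
    return np.uint8(255) - np.asarray(mask, dtype=np.uint8)


def combine(foreground_img, background_img, mask, threshold=127):
    """Take foreground pixels where the mask is set, background pixels elsewhere.

    Assumption: hard per-pixel selection; a production pipeline may blend
    or feather the boundary instead.
    """
    select = (np.asarray(mask) > threshold)[..., None]  # (H, W, 1), broadcasts over RGB
    return np.where(select, foreground_img, background_img)
```

Calling `combine` with the anti-mask instead of the mask swaps which image contributes each region.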

Prerequisites

We have developed an AWS CloudFormation template that creates the SageMaker notebooks used to deploy the endpoints and run inference.

You must have an AWS account with AWS Identity and Access Management (IAM) roles that provide access to the following:

- AWS CloudFormation

- SageMaker

  - Although SageMaker endpoints provide instances to run ML models, we use GPU-enabled SageMaker endpoints to run heavy workloads like generative AI models. Refer to Amazon SageMaker Pricing for more information about pricing.

  - We use the NVIDIA A10G-enabled instance ml.g5.2xlarge to host the models.

- Amazon Simple Storage Service (Amazon S3)

For more details, check out the GitHub repository and the CloudFormation template.

Mask the area of interest of the product

In general, we need to provide an image of the object that we want to place and a mask delineating the contour of the object. This can be done using tools such as Amazon SageMaker Ground Truth. Alternatively, we can automatically segment the object using AI tools such as the Segment Anything Model (SAM), assuming that the object is in the center of the image.

Use SAM to generate a mask

With SAM, an advanced generative AI technique, we can effortlessly generate high-quality masks for various objects within images. SAM uses deep learning models trained on extensive datasets to accurately identify and segment objects of interest, providing precise boundaries and pixel-level masks. This breakthrough technology revolutionizes image processing workflows by automating the time-consuming and labor-intensive task of manually creating masks. With SAM, businesses and individuals can now rapidly generate masks for object recognition, image editing, computer vision tasks, and more, unlocking a world of possibilities for visual analysis and manipulation.

Host the SAM model on a SageMaker endpoint

We use the notebook 1_HostGenAIModels.ipynb to create SageMaker endpoints and host the SAM model.

We use the inference code in inference_sam.py and package that into a code.tar.gz file, which we use to create the SageMaker endpoint. The code downloads the SAM model, hosts it on an endpoint, and provides an entry point to run inference and generate output:

# Assumes bucket, role, sess, and INSTANCE_TYPE are defined earlier in the notebook
from datetime import datetime
from sagemaker import s3
from sagemaker.deserializers import JSONDeserializer
from sagemaker.pytorch import PyTorchModel

SAM_ENDPOINT_NAME = 'sam-pytorch-' + str(datetime.utcnow().strftime('%Y-%m-%d-%H-%M-%S-%f'))
prefix_sam = "SAM/demo-custom-endpoint"
# Upload the packaged inference code to Amazon S3
model_data_sam = s3.S3Uploader.upload("code.tar.gz", f's3://{bucket}/{prefix_sam}')
model_sam = PyTorchModel(entry_point="inference_sam.py",
                         model_data=model_data_sam,
                         framework_version='1.12',
                         py_version='py38',
                         role=role,
                         env={'TS_MAX_RESPONSE_SIZE':'2000000000', 'SAGEMAKER_MODEL_SERVER_TIMEOUT' : '300'},
                         sagemaker_session=sess,
                         name="model-"+SAM_ENDPOINT_NAME)
# Deploy the model to a SageMaker endpoint
predictor_sam = model_sam.deploy(initial_instance_count=1,
                                 instance_type=INSTANCE_TYPE,
                                 deserializer=JSONDeserializer(),
                                 endpoint_name=SAM_ENDPOINT_NAME)

Invoke the SAM model and generate a mask

The following code is part of the 2_GenerateInPaintingImages.ipynb notebook, which is used to run the endpoints and generate results:

import numpy as np
from PIL import Image
from sagemaker.deserializers import JSONDeserializer
from sagemaker.pytorch import PyTorchPredictor

raw_image = Image.open("images/speaker.png").convert("RGB")
predictor_sam = PyTorchPredictor(endpoint_name=SAM_ENDPOINT_NAME,
                                 deserializer=JSONDeserializer())
# Request a JSON response and convert it back into an image
output_array = predictor_sam.predict(raw_image, initial_args={'Accept': 'application/json'})
mask_image = Image.fromarray(np.array(output_array).astype(np.uint8))
# save the mask image using PIL Image
mask_image.save('images/speaker_mask.png')

The following figure shows the resulting mask obtained from the product image.
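Because the automatic segmentation assumes the object is near the center of the image, it can be useful to sanity-check the returned mask before inpainting. The following sketch is not part of the notebooks; `mask_summary` is a hypothetical helper that assumes a mask array with values in the 0 to 255 range and reports how much of the image the mask covers and how far its centroid is from the image center.

```python
import numpy as np


def mask_summary(mask, threshold=127):
    """Summarize a segmentation mask.

    Returns the fraction of pixels covered and the centroid offset from the
    image center, expressed as a fraction of the image height and width.
    """
    binary = np.asarray(mask) > threshold
    if binary.ndim == 3:  # an RGB mask: a pixel is set if any channel is set
        binary = binary.any(axis=2)

    ys, xs = np.nonzero(binary)
    if ys.size == 0:
        raise ValueError("mask is empty")

    height, width = binary.shape
    offset_y = abs(ys.mean() - (height - 1) / 2) / height
    offset_x = abs(xs.mean() - (width - 1) / 2) / width
    return {
        "coverage": float(binary.mean()),
        "center_offset": (float(offset_y), float(offset_x)),
    }
```

A very small coverage value or a large offset may indicate that the segmentation picked up something other than the product; suitable thresholds depend on the catalog.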

Use inpainting to create a generated image

By combining the power of inpainting with the mask generated by SAM and the user’s prompt, we can create remarkable generated images. Inpainting uses advanced generative AI techniques to intelligently fill in the missing or masked regions of an image, seamlessly blending them with the surrounding content. With the SAM-generated mask as guidance and the user’s prompt as a creative input, inpainting algorithms can generate visually coherent and contextually appropriate content, resulting in stunning and personalized images. This fusion of technologies opens up endless creative possibilities, allowing users to transform their visions into vivid, captivating visual narratives.

Host a Stable Diffusion Inpainting model on a SageMaker endpoint

As with the SAM model, we use the notebook 1_HostGenAIModels.ipynb to create SageMaker endpoints and host the Stable Diffusion Inpainting model.

We use the inference code in inference_inpainting.py and package that into a code.tar.gz file, which we use to create the SageMaker endpoint. The code downloads the Stable Diffusion Inpainting model, hosts it on an endpoint, and provides an entry point to run inference and generate output:

# Assumes bucket, role, sess, and INSTANCE_TYPE are defined earlier in the notebook
from datetime import datetime
from sagemaker import s3
from sagemaker.deserializers import JSONDeserializer
from sagemaker.pytorch import PyTorchModel
from sagemaker.serializers import JSONSerializer

INPAINTING_ENDPOINT_NAME = 'inpainting-pytorch-' + str(datetime.utcnow().strftime('%Y-%m-%d-%H-%M-%S-%f'))
prefix_inpainting = "InPainting/demo-custom-endpoint"
# Upload the packaged inference code to Amazon S3
model_data_inpainting = s3.S3Uploader.upload("code.tar.gz", f"s3://{bucket}/{prefix_inpainting}")
model_inpainting = PyTorchModel(entry_point="inference_inpainting.py",
                                model_data=model_data_inpainting,
                                framework_version='1.12',
                                py_version='py38',
                                role=role,
                                env={'TS_MAX_RESPONSE_SIZE':'2000000000', 'SAGEMAKER_MODEL_SERVER_TIMEOUT' : '300'},
                                sagemaker_session=sess,
                                name="model-"+INPAINTING_ENDPOINT_NAME)
# Deploy the model to a SageMaker endpoint with a larger storage volume
predictor_inpainting = model_inpainting.deploy(initial_instance_count=1,
                                               instance_type=INSTANCE_TYPE,
                                               serializer=JSONSerializer(),
                                               deserializer=JSONDeserializer(),
                                               endpoint_name=INPAINTING_ENDPOINT_NAME,
                                               volume_size=128)

Invoke the Stable Diffusion Inpainting model and generate a new image

Similar to the step to invoke the SAM model, the notebook 2_GenerateInPaintingImages.ipynb is used to run inference on the endpoints and generate results:

raw_image = Picture.open("photographs/speaker.png").convert("RGB")

mask_image = Picture.open('photographs/speaker_mask.png').convert('RGB')

prompt_fr = "desk and chair with books"

prompt_bg = "window and sofa, desk"

negative_prompt = "longbody, lowres, dangerous anatomy, dangerous fingers, lacking fingers, additional digit, fewer digits, cropped, worst high quality, low high quality, letters"

inputs = {}

inputs["image"] = np.array(raw_image)

inputs["mask"] = np.array(mask_image)

inputs["prompt_fr"] = prompt_fr

inputs["prompt_bg"] = prompt_bg

inputs["negative_prompt"] = negative_prompt

predictor_inpainting = PyTorchPredictor(endpoint_name=INPAINTING_ENDPOINT_NAME,

serializer=JSONSerializer(),

deserializer=JSONDeserializer())

output_array = predictor_inpainting.predict(inputs, initial_args={'Settle for': 'software/json'})

gai_image = Picture.fromarray(np.array(output_array[0]).astype(np.uint8))

gai_background = Picture.fromarray(np.array(output_array[1]).astype(np.uint8))

gai_mask = Picture.fromarray(np.array(output_array[2]).astype(np.uint8))

post_image = Picture.fromarray(np.array(output_array[3]).astype(np.uint8))

# save the generated picture utilizing PIL Picture

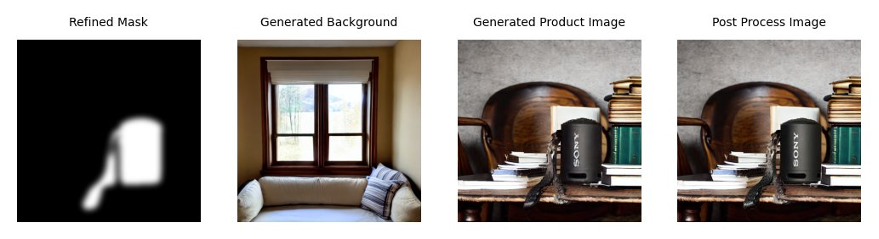

post_image.save('photographs/speaker_generated.png')The next determine reveals the refined masks, generated background, generated product picture, and postprocessed picture.

The generated product image uses the following prompts:

- Background generation – “chair, sofa, window, indoor”

- Inpainting – “besides books”

Clean up

In this post, we use two GPU-enabled SageMaker endpoints, which account for the majority of the cost. These endpoints should be turned off when they are not being used to avoid extra cost. We have provided a notebook, 3_CleanUp.ipynb, which can assist in cleaning up the endpoints. We also use a SageMaker notebook to host the models and run inference. Therefore, it’s good practice to stop the notebook instance if it’s not being used.
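For readers who prefer to script the cleanup, the following is a minimal sketch of deleting endpoints and their endpoint configurations. It is not the content of 3_CleanUp.ipynb; `delete_endpoints` is a hypothetical helper that expects a SageMaker client object (for example, one created with `boto3.client("sagemaker")`) and uses the `describe_endpoint`, `delete_endpoint`, and `delete_endpoint_config` calls.

```python
def delete_endpoints(sm_client, endpoint_names):
    """Delete each endpoint and its endpoint configuration.

    sm_client: a SageMaker client, e.g. boto3.client("sagemaker").
    endpoint_names: iterable of endpoint names to remove.
    Returns the list of endpoint names that were deleted.
    """
    deleted = []
    for name in endpoint_names:
        # Look up the configuration before the endpoint disappears
        description = sm_client.describe_endpoint(EndpointName=name)
        config_name = description["EndpointConfigName"]

        sm_client.delete_endpoint(EndpointName=name)
        sm_client.delete_endpoint_config(EndpointConfigName=config_name)
        deleted.append(name)
    return deleted
```

In this post's setup, the names passed in would be SAM_ENDPOINT_NAME and INPAINTING_ENDPOINT_NAME. The associated model resources are left in place by this sketch and can be removed separately.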

Conclusion

Generative AI models are generally large-scale ML models that require specific resources to run efficiently. In this post, we demonstrated, using an advertising use case, how SageMaker endpoints offer a scalable and managed environment for hosting generative AI models such as the text-to-image foundation model Stable Diffusion. We demonstrated how two models can be hosted and run as needed, and multiple models can also be hosted from a single endpoint. This eliminates the complexities associated with infrastructure provisioning, scalability, and monitoring, enabling organizations to focus solely on deploying their models and serving predictions to solve their business challenges. With SageMaker endpoints, organizations can efficiently deploy and manage multiple models within a unified infrastructure, achieving optimal resource utilization and reducing operational overhead.

The detailed code is available on GitHub. The code demonstrates the use of AWS CloudFormation and the AWS Cloud Development Kit (AWS CDK) to automate the process of creating SageMaker notebooks and other required resources.

About the authors

Fabian Benitez-Quiroz is an IoT Edge Data Scientist in AWS Professional Services. He holds a PhD in Computer Vision and Pattern Recognition from The Ohio State University. Fabian is involved in helping customers run their machine learning models with low latency on IoT devices and in the cloud across various industries.


Romil Shah is a Sr. Data Scientist at AWS Professional Services. Romil has more than 6 years of industry experience in computer vision, machine learning, and IoT edge devices. He is involved in helping customers optimize and deploy their machine learning models for edge devices and on the cloud. He works with customers to create strategies for optimizing and deploying foundation models.


Han Man is a Senior Data Science & Machine Learning Manager with AWS Professional Services based in San Diego, CA. He has a PhD in Engineering from Northwestern University and has several years of experience as a management consultant advising clients in manufacturing, financial services, and energy. Currently, he is passionately working with key customers from a variety of industry verticals to develop and implement ML and GenAI solutions on AWS.
