Is your model good? A deep dive into Amazon SageMaker Canvas advanced metrics

If you’re a business analyst, understanding customer behavior is probably one of the most important things you care about. Understanding the reasons and mechanisms behind customer purchase decisions can facilitate revenue growth. However, the loss of customers (commonly referred to as customer churn) always poses a risk. Gaining insights into why customers leave can be just as crucial for sustaining profits and revenue.

Although machine learning (ML) can provide valuable insights, ML experts were needed to build customer churn prediction models until the introduction of Amazon SageMaker Canvas.

SageMaker Canvas is a low-code/no-code managed service that allows you to create ML models that can solve many business problems without writing a single line of code. It also enables you to evaluate the models using advanced metrics as if you were a data scientist.

In this post, we show how a business analyst can evaluate and understand a classification churn model created with SageMaker Canvas using the Advanced metrics tab. We explain the metrics and show techniques for working with the data to obtain better model performance.

Prerequisites

If you would like to implement all or some of the tasks described in this post, you need an AWS account with access to SageMaker Canvas. Refer to Predict customer churn with no-code machine learning using Amazon SageMaker Canvas to cover the basics around SageMaker Canvas, the churn model, and the dataset.

Introduction to model performance evaluation

As a general guideline, when you need to evaluate the performance of a model, you’re trying to measure how well the model will predict something when it sees new data. This prediction is called inference. You start by training the model using existing data, and then ask the model to predict the outcome on data that it has not already seen. How accurately the model predicts this outcome is what you look at to understand the model performance.

If the model hasn’t seen the new data, how would anybody know if the prediction is good or bad? The idea is to use historical data where the results are already known and compare these values to the model’s predicted values. This is enabled by setting aside a portion of the historical training data so it can be compared with what the model predicts for those values.

In the example of customer churn (which is a categorical classification problem), you start with a historical dataset that describes customers with many attributes (one customer per record). One of the attributes, called Churn, can be True or False, describing whether the customer left the service or not. To evaluate model accuracy, we split this dataset and train the model using one part (the training dataset), and ask the model to predict the outcome (classify the customer as Churn or not) with the other part (the test dataset). We then compare the model’s prediction to the ground truth contained in the test dataset.
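To make the split concrete, the following is a minimal Python sketch of holding out part of a historical dataset for testing. SageMaker Canvas performs this step for you; the `train_test_split` helper, the toy `customers` records, and the 80/20 ratio are assumptions chosen for illustration and are not taken from Canvas.

```python
import random

def train_test_split(records, test_fraction=0.2, seed=42):
    """Shuffle a copy of the records and hold out a fraction as the test set."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    # The first n_test shuffled records become the test set, the rest the training set.
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical toy records: only an id and the known Churn label.
customers = [{"id": i, "Churn": i % 7 == 0} for i in range(100)]
train, test = train_test_split(customers)
print(len(train), len(test))
```

The model is trained only on `train`; the known Churn labels in `test` are then compared with the model’s predictions.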

Interpreting advanced metrics

In this section, we discuss the advanced metrics in SageMaker Canvas that can help you understand model performance.

Confusion matrix

SageMaker Canvas uses confusion matrices to help you visualize when a model generates predictions correctly. In a confusion matrix, your results are arranged to compare the predicted values against the actual historical (known) values. The following example explains how a confusion matrix works for a two-category prediction model that predicts positive and negative labels:

- True positive – The model correctly predicted positive when the true label was positive

- True negative – The model correctly predicted negative when the true label was negative

- False positive – The model incorrectly predicted positive when the true label was negative

- False negative – The model incorrectly predicted negative when the true label was positive

The following image is an example of a confusion matrix for two categories. In our churn model, the actual values come from the test dataset, and the predicted values come from asking our model.
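The four cells of a two-category confusion matrix can be counted directly from paired actual and predicted labels. The following sketch uses made-up labels; the `confusion_counts` helper is hypothetical and only illustrates the definitions above.

```python
def confusion_counts(actual, predicted):
    """Count TP, TN, FP, and FN for boolean labels (True = positive, e.g. churned)."""
    tp = tn = fp = fn = 0
    for a, p in zip(actual, predicted):
        if a and p:
            tp += 1   # predicted positive, actually positive
        elif not a and not p:
            tn += 1   # predicted negative, actually negative
        elif p:
            fp += 1   # predicted positive, actually negative
        else:
            fn += 1   # predicted negative, actually positive
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn}

# Made-up test-set labels and model predictions.
actual    = [True, True, True, False, False, False, False, False]
predicted = [True, True, False, False, False, False, True, False]
counts = confusion_counts(actual, predicted)
print(counts)
```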

Accuracy

Accuracy is the percentage of correct predictions out of all the rows or samples of the test set. It’s the true samples that were predicted as True, plus the false samples that were correctly predicted as False, divided by the total number of samples in the test set: (TP + TN) / (TP + TN + FP + FN).

It’s one of the most important metrics to understand because it tells you what percentage of the samples the model predicted correctly, but it can be misleading in some cases. For example:

- Class imbalance – When the classes in your dataset are not evenly distributed (you have a disproportionate number of samples from one class and very few from the others), accuracy can be misleading. In such cases, even a model that simply predicts the majority class for every instance can achieve a high accuracy.

- Cost-sensitive classification – In some applications, the cost of misclassification for different classes can be different. For example, if we were predicting whether a drug can aggravate a condition, a false negative (for example, predicting the drug might not aggravate when it actually does) can be more costly than a false positive (for example, predicting the drug might aggravate when it actually doesn’t).
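The class imbalance case is easy to reproduce. In the following sketch, a made-up test set has 95 customers who stayed and 5 who churned, and a naive "model" always predicts that nobody churns. The numbers are assumptions chosen only to illustrate the point.

```python
def accuracy(actual, predicted):
    """Fraction of predictions that match the known labels."""
    correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    return correct / len(actual)

# Made-up imbalanced test set: 95 customers stayed (False), 5 churned (True).
actual = [False] * 95 + [True] * 5
# A naive baseline that always predicts the majority class.
always_no_churn = [False] * 100

baseline_accuracy = accuracy(actual, always_no_churn)
churners_found = sum(1 for a, p in zip(actual, always_no_churn) if a and p)
print(baseline_accuracy, churners_found)
```

The baseline scores 95% accuracy while identifying none of the churners, which is why accuracy should be read alongside the other metrics in this section.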

Precision, recall, and F1 score

Precision is the fraction of true positives (TP) out of all the predicted positives (TP + FP). It measures the proportion of positive predictions that are actually correct.

Recall is the fraction of true positives (TP) out of all the actual positives (TP + FN). It measures the proportion of positive instances that were correctly predicted as positive by the model.

The F1 score combines precision and recall to provide a single score that balances the trade-off between them. It’s defined as the harmonic mean of precision and recall:

F1 score = 2 * (precision * recall) / (precision + recall)

The F1 score ranges from 0–1, with a higher score indicating better performance. A perfect F1 score of 1 indicates that the model has achieved both perfect precision and perfect recall, and a score of 0 indicates that the model did not correctly identify a single positive instance.
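As a worked example of these definitions, the following sketch computes precision, recall, and the F1 score from confusion matrix counts. The counts (40 true positives, 10 false positives, 20 false negatives) are made up for illustration, and the `precision_recall_f1` helper is hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from confusion matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        # No true positives at all: F1 is defined as 0 here.
        return precision, recall, 0.0
    f1 = 2 * (precision * recall) / (precision + recall)
    return precision, recall, f1

# Made-up counts: 40 true positives, 10 false positives, 20 false negatives.
precision, recall, f1 = precision_recall_f1(tp=40, fp=10, fn=20)
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

With these counts, precision is 0.8, recall is about 0.667, and F1 is about 0.727. Because F1 is a harmonic mean, it lies closer to the lower of the two values than a simple average would.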

The F1 score provides a balanced evaluation of the model’s performance. It considers precision and recall, providing a more informative evaluation metric that reflects the model’s ability to correctly classify positive instances and avoid false positives and false negatives.

For example, in medical diagnosis, fraud detection, and sentiment analysis, F1 is especially relevant. In medical diagnosis, accurately identifying the presence of a specific disease or condition is crucial, and false negatives or false positives can have significant consequences. The F1 score takes into account both precision (the ability to correctly identify positive cases) and recall (the ability to find all positive cases), providing a balanced evaluation of the model’s performance in detecting the disease. Similarly, in fraud detection, where the number of actual fraud cases is relatively low compared to non-fraudulent cases (imbalanced classes), accuracy alone may be misleading due to a high number of true negatives. The F1 score provides a comprehensive measure of the model’s ability to detect both fraudulent and non-fraudulent cases, considering both precision and recall. And in sentiment analysis, if the dataset is imbalanced, accuracy may not accurately reflect the model’s performance in classifying instances of the positive sentiment class.

AUC (area under the curve)

The AUC metric evaluates the ability of a binary classification model to distinguish between positive and negative classes at all classification thresholds. A threshold is a value used by the model to decide between the two possible classes, converting the probability of a sample belonging to a class into a binary decision. To calculate the AUC, the true positive rate (TPR) and false positive rate (FPR) are plotted across various threshold settings. The TPR measures the proportion of true positives out of all actual positives, whereas the FPR measures the proportion of false positives out of all actual negatives. The resulting curve, called the receiver operating characteristic (ROC) curve, provides a visual representation of the TPR and FPR at different threshold settings. The AUC value, which ranges from 0–1, represents the area under the ROC curve. Higher AUC values indicate better performance, with a perfect classifier achieving an AUC of 1.

The following plot shows the ROC curve, with TPR as the Y axis and FPR as the X axis. The closer the curve gets to the top left corner of the plot, the better the model does at classifying the data into categories.
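The construction of the ROC curve and its area can be sketched in a few lines of Python. The labels and predicted probabilities below are made up, and the `roc_points` and `auc` helpers are hypothetical; the sketch sweeps every distinct score as a threshold and integrates with the trapezoidal rule, which is one common way to compute the area and not necessarily how Canvas does it.

```python
def roc_points(actual, scores):
    """Return (FPR, TPR) points, one per threshold, starting at (0, 0)."""
    positives = sum(actual)
    negatives = len(actual) - positives
    points = [(0.0, 0.0)]
    # Lowering the threshold step by step classifies more samples as positive.
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for a, s in zip(actual, scores) if a and s >= threshold)
        fp = sum(1 for a, s in zip(actual, scores) if not a and s >= threshold)
        points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Area under the curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area

# Made-up ground truth and predicted probabilities of the positive class.
actual = [True, True, False, True, False, False]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
points = roc_points(actual, scores)
area = auc(points)
print(round(area, 3))
```

In this toy example the area is 8/9 (about 0.889): of the nine possible positive/negative pairs, the model ranks the positive sample higher in eight.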

To clarify, let’s go over an example. Consider a fraud detection model. Usually, these models are trained on unbalanced datasets, because the majority of the transactions in the dataset are non-fraudulent, with only a few labeled as fraud. In this case, accuracy alone may not adequately capture the performance of the model because it’s probably heavily influenced by the abundance of non-fraudulent cases, leading to misleadingly high accuracy scores.

In this case, the AUC would be a better metric to assess model performance because it provides a comprehensive assessment of a model’s ability to distinguish between fraudulent and non-fraudulent transactions. It offers a more nuanced evaluation, taking into account the trade-off between true positive rate and false positive rate at various classification thresholds.

Just like the F1 score, it’s particularly useful when the dataset is imbalanced. It measures the trade-off between TPR and FPR and shows how well the model can differentiate between the two classes regardless of their distribution. This means that even if one class is significantly smaller than the other, the ROC curve assesses the model’s performance in a balanced manner by considering both classes equally.

Additional key topics

Advanced metrics are not the only important tools available to you for evaluating and improving ML model performance. Data preparation, feature engineering, and feature impact analysis are techniques that are essential to model building. These activities play a crucial role in extracting meaningful insights from raw data and improving model performance, leading to more robust and insightful results.

Data preparation and feature engineering

Feature engineering is the process of selecting, transforming, and creating new variables (features) from raw data, and plays a key role in improving the performance of an ML model. Selecting the most relevant variables or features from the available data involves removing irrelevant or redundant features that don’t contribute to the model’s predictive power. Transforming data features into a suitable format includes scaling, normalization, and handling missing values. And finally, creating new features from the existing data is done through mathematical transformations, combining or interacting different features, or creating new features from domain-specific knowledge.

Feature importance analysis

SageMaker Canvas generates a feature importance analysis that explains the impact that each column in your dataset has on the model. When you generate predictions, you can see the column impact that identifies which columns have the most impact on each prediction. This gives you insights on which features deserve to be part of your final model and which ones should be discarded. Column impact is a percentage score that indicates how much weight a column has in making predictions in relation to the other columns. For a column impact of 25%, Canvas weighs the prediction as 25% for the column and 75% for the other columns.

Approaches to improve model accuracy

Although there are multiple methods to improve model accuracy, data scientists and ML practitioners usually follow one of the two approaches discussed in this section, using the tools and metrics described earlier.

Model-centric approach

In this approach, the data always remains the same and is used to iteratively improve the model to meet desired results. Tools used with this approach include:

- Trying multiple relevant ML algorithms

- Algorithm and hyperparameter tuning and optimization

- Different model ensemble methods

- Using pre-trained models (SageMaker provides various built-in or pre-trained models to help ML practitioners)

- AutoML, which is what SageMaker Canvas does behind the scenes (using Amazon SageMaker Autopilot), and which encompasses all of the above

Data-centric approach

In this approach, the focus is on data preparation, improving data quality, and iteratively modifying the data to improve performance:

- Exploring statistics of the dataset used to train the model, also known as exploratory data analysis (EDA)

- Improving data quality (data cleaning, missing values imputation, outlier detection and management)

- Feature selection

- Feature engineering

- Data augmentation

Improving model performance with Canvas

We begin with the data-centric approach. We use the model preview functionality to perform an initial EDA. This gives us a baseline that we can use to perform data augmentation, generating a new baseline, and finally getting the best model with a model-centric approach using the standard build functionality.

We use the synthetic dataset from a telecommunications mobile phone carrier. This sample dataset contains 5,000 records, where each record uses 21 attributes to describe the customer profile. Refer to Predict customer churn with no-code machine learning using Amazon SageMaker Canvas for a full description.

Model preview in a data-centric approach

As a first step, we open the dataset, select the column to predict as Churn?, and generate a preview model by choosing Preview model.

The Preview model pane will show the progress until the preview model is ready.

When the model is ready, SageMaker Canvas generates a feature importance analysis.

Finally, when it’s complete, the pane will show a list of columns with their impact on the model. These are useful to understand how relevant the features are to our predictions. Column impact is a percentage score that indicates how much weight a column has in making predictions in relation to the other columns. In the following example, for the Night Calls column, SageMaker Canvas weights the prediction as 4.04% for the column and 95.96% for the other columns. The higher the value, the higher the impact.

As we can see, the preview model has a 95.6% accuracy. Let’s try to improve the model performance using a data-centric approach. We perform data preparation and use feature engineering techniques to improve performance.

As shown in the following screenshot, we can observe that the Phone and State columns have much less impact on our prediction. Therefore, we’ll use this information as input for our next phase, data preparation.

SageMaker Canvas provides ML data transforms with which you can clean, transform, and prepare your data for model building. You can use these transforms on your datasets without any code, and they will be added to the model recipe, which is a record of the data preparation performed on your data before building the model.

Note that any data transforms you use only modify the input data when building a model and don’t modify your dataset or original data source.

The following transforms are available in SageMaker Canvas for you to prepare your data for building:

- Datetime extraction

- Drop columns

- Filter rows

- Functions and operators

- Manage rows

- Rename columns

- Remove rows

- Replace values

- Resample time series data

Let’s start by dropping the columns we have found to have little impact on our prediction.

For example, in this dataset, the phone number is just the equivalent of an account number; it’s useless or even detrimental in predicting other accounts’ likelihood of churn. Likewise, the customer’s state doesn’t impact our model much. Let’s remove the Phone and State columns by unselecting those features under Column name.

Now, let’s perform some additional data transformation and feature engineering.

For example, we noticed in our previous analysis that the amount charged to customers has a direct impact on churn. Let’s therefore create a new column that computes the total charges to our customers by combining Charge, Mins, and Calls for Day, Eve, Night, and Intl. To do so, we use the custom formulas in SageMaker Canvas.

Let’s start by choosing Functions, then we add to the formula textbox the following text:

(Day Calls*Day Charge*Day Mins)+(Eve Calls*Eve Charge*Eve Mins)+(Night Calls*Night Charge*Night Mins)+(Intl Calls*Intl Charge*Intl Mins)

Give the new column a name (for example, Total Charges), and choose Add after the preview has been generated. The model recipe should now look as shown in the following screenshot.
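For readers who want to see what the custom formula computes for a single record, the following Python sketch evaluates the same expression outside of Canvas. The `total_charges` helper and the sample values are hypothetical, and the column suffixes are passed in as a parameter because the exact column names depend on your copy of the dataset.

```python
PERIODS = ("Day", "Eve", "Night", "Intl")

def total_charges(record, suffixes=("Calls", "Charge", "Mins")):
    """Sum, over the four periods, the product of the three per-period columns."""
    total = 0.0
    for period in PERIODS:
        product = 1.0
        for suffix in suffixes:
            # Column names are assumed to look like "Day Calls", "Eve Charge", and so on.
            product *= record[f"{period} {suffix}"]
        total += product
    return total

# Made-up customer record for illustration only.
customer = {
    "Day Calls": 2, "Day Charge": 1.5, "Day Mins": 10,
    "Eve Calls": 1, "Eve Charge": 2.0, "Eve Mins": 5,
    "Night Calls": 3, "Night Charge": 0.5, "Night Mins": 4,
    "Intl Calls": 1, "Intl Charge": 1.0, "Intl Mins": 2,
}
new_column_value = total_charges(customer)
print(new_column_value)
```

For this record the four products are 30, 10, 6, and 2, so the new column would hold 48.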

When this data preparation is complete, we train a new preview model to see if the model improved. Choose Preview model again, and the lower right pane will show the progress.

When training is finished, it will proceed to recompute the predicted accuracy, and will also create a new column impact analysis.

And finally, when the whole process is complete, we can see the same pane we saw earlier but with the new preview model accuracy. You can see that model accuracy increased by 0.4 percentage points (from 95.6% to 96%).

The numbers in the preceding images might differ from yours because ML introduces some stochasticity in the process of training models, which can lead to different results in different builds.

Model-centric approach to create the model

Canvas offers two options to build your models:

- Standard build – Builds the best model from an optimized process where speed is exchanged for better accuracy. It uses AutoML, which automates various tasks of ML, including model selection, trying various algorithms relevant to your ML use case, hyperparameter tuning, and creating model explainability reports.

- Quick build – Builds a simple model in a fraction of the time compared to a standard build, but accuracy is exchanged for speed. Quick build is useful when iterating, to more quickly understand the impact of data changes on your model accuracy.

Let’s continue using a standard build approach.

Standard build

As we saw before, the standard build builds the best model from an optimized process to maximize accuracy.

The build process for our churn model takes around 45 minutes. During this time, Canvas tests hundreds of candidate pipelines, selecting the best model. In the following screenshot, we can see the expected build time and progress.

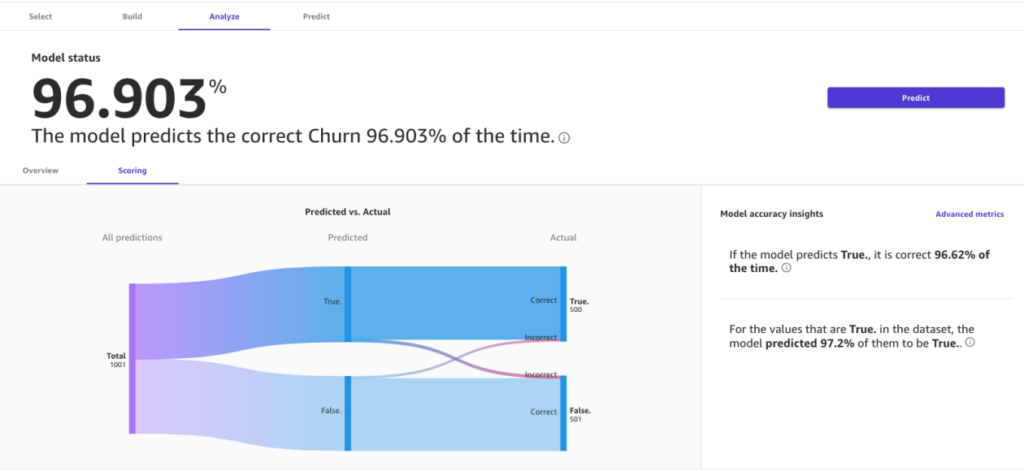

With the standard build process, our model accuracy has improved to 96.903%, which is a significant improvement.

Explore advanced metrics

Let’s explore the model using the Advanced metrics tab. On the Scoring tab, choose Advanced metrics.

This page will show the following confusion matrix together with the advanced metrics: F1 score, accuracy, precision, recall, and AUC.

Generate predictions

Now that the metrics look good, we can perform an interactive prediction on the Predict tab, either as a batch or single (real-time) prediction.

We have two options:

- Use this model to run batch or single predictions

- Send the model to Amazon SageMaker Studio to share with data scientists

Clean up

To avoid incurring future session charges, log out of SageMaker Canvas.

Conclusion

SageMaker Canvas provides powerful tools that enable you to build and assess the accuracy of models, improving their performance without the need for coding or specialized data science and ML expertise. As we have seen in the example through the creation of a customer churn model, by combining these tools with both a data-centric and a model-centric approach using advanced metrics, business analysts can create and evaluate prediction models. With a visual interface, you’re also empowered to generate accurate ML predictions on your own. We encourage you to go through the references and see how many of these concepts might apply to other types of ML problems.

References

About the Authors

Marcos is an AWS Sr. Machine Learning Solutions Architect based in Florida, US. In that role, he is responsible for guiding and assisting US startup organizations in their strategy towards the cloud, providing guidance on how to address high-risk issues and optimize their machine learning workloads. He has more than 25 years of experience with technology, including cloud solution development, machine learning, software development, and data center infrastructure.

Indrajit is an AWS Enterprise Sr. Solutions Architect. In his role, he helps customers achieve their business outcomes through cloud adoption. He designs modern application architectures based on microservices, serverless, APIs, and event-driven patterns. He works with customers to realize their data analytics and machine learning goals through adoption of DataOps and MLOps practices and solutions. Indrajit speaks regularly at AWS public events like summits and ASEAN workshops, has published several AWS blog posts, and developed customer-facing technical workshops focused on data and machine learning on AWS.